In this blog post, we will see how Cortana skills can play short audio clips and compose the Text-to-Speech (TTS) responses that Cortana speaks on behalf of your skill. We will use the SSML support in the Cortana Skills Kit to achieve this.

If you haven’t already, do check out our first post on creating your Cortana skill and the second on personalizing it as per your skill context.

SSML is an XML-based markup language that skill developers can use to specify the speech text that Cortana translates to speech. Using SSML improves the quality of synthesized content over sending Cortana plain text. Cortana’s implementation of SSML is based on World Wide Web Consortium’s Speech Synthesis Markup Language Version 1.0. Check out the complete documentation here on how you can leverage SSML in your skill.

In this blog post, we will focus on using the SSML audio tag in a Cortana skill to play audio as part of the speech response. This tag is useful in a variety of response scenarios, such as playing existing audio snippets, responding to a user request with music, or playing music as a progress indicator during a long-running operation.

Let’s begin!

(Go through Steps 1 to 3 from our first blog post if you have never built a skill before. Pick up here once you are finishing Step 4 there.)

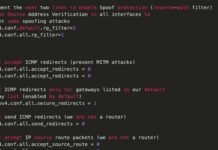

Change the switch statement in the Run method of the run.csx file to:

    switch (activity.GetActivityType())
    {
        case ActivityTypes.Message:
            var client = new ConnectorClient(new Uri(activity.ServiceUrl));
            var reply = activity.CreateReply();
            reply.Text = "Hello World! Now playing a sample mp3 file using SSML.";
            reply.Speak = @"<speak version=""1.0"" xml:lang=""en-US"">Hello World! Testing a sample mp3 file. <audio src=""https://www.sample-videos.com/audio/mp3/crowd-cheering.mp3""/> </speak>";
            await client.Conversations.ReplyToActivityAsync(reply);
            break;
        case ActivityTypes.ContactRelationUpdate:
        case ActivityTypes.Typing:
        case ActivityTypes.DeleteUserData:
        case ActivityTypes.Ping:
        default:
            log.Error($"Unknown activity type ignored: {activity.GetActivityType()}");
            break;
    }

You will see that we used the <speak> element to enclose our SSML markup. It is the root element of an SSML-based response, and every element you use to compose the response (like the audio tag used here) must be enclosed within it.
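Because everything has to sit inside a single <speak> root, it can help to check a response string before sending it. Here is a minimal sketch of such a check. `SsmlValidator` and `IsWellFormedSpeak` are hypothetical names, not part of the Cortana Skills Kit, and the check only confirms that the string parses as XML with `speak` as its root. It does not validate the markup against the SSML schema.

```csharp
using System;
using System.Xml;
using System.Xml.Linq;

// Hypothetical helper for illustration; not part of the Cortana Skills Kit.
public static class SsmlValidator
{
    // Returns true when the string parses as XML and its root element is <speak>.
    public static bool IsWellFormedSpeak(string ssml)
    {
        if (string.IsNullOrWhiteSpace(ssml))
        {
            return false;
        }

        try
        {
            XElement root = XElement.Parse(ssml);
            return root.Name.LocalName == "speak";
        }
        catch (XmlException)
        {
            // Not well-formed XML (for example plain text or an unclosed tag).
            return false;
        }
    }
}
```

You could call `SsmlValidator.IsWellFormedSpeak(reply.Speak)` just before replying and log a warning when it returns false.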

For a full list of elements you can leverage, check out the documentation here.

In this example, we are using the <audio> element to specify the file we want to play in our response. The audio file you play via SSML must be:

- MP3 format (MPEG v2, Bit rate 48kbps, sample rate 16000 Hz)

- Hosted on an Internet-accessible HTTPS endpoint.

- Less than ninety (90) seconds in length
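
The URL-level parts of these requirements can be checked in code before you compose the response. The sketch below assumes a hypothetical helper named `AudioSourceChecker` (it is not part of the Cortana Skills Kit). It looks only at the HTTPS scheme and an `.mp3` file extension; confirming the bit rate, sample rate and clip length would require inspecting the file itself.

```csharp
using System;

// Hypothetical helper for illustration; not part of the Cortana Skills Kit.
public static class AudioSourceChecker
{
    // Returns true when the URL is an absolute HTTPS address whose path ends in ".mp3".
    // It does not verify the encoding (bit rate, sample rate) or the clip length.
    public static bool LooksPlayable(string url)
    {
        if (!Uri.TryCreate(url, UriKind.Absolute, out Uri uri))
        {
            return false;
        }

        return uri.Scheme == Uri.UriSchemeHttps
            && uri.AbsolutePath.EndsWith(".mp3", StringComparison.OrdinalIgnoreCase);
    }
}
```

For example, `AudioSourceChecker.LooksPlayable("https://www.sample-videos.com/audio/mp3/crowd-cheering.mp3")` passes, while the same address with an `http://` prefix does not.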

What to do when you have a longer audio stream? Good question and an apt topic for our next blog post. 🙂

For now, save the file and test your Cortana skill. Check out Steps 4 and 5 from the first blog post for instructions on how to invoke your skill.

You should hear the audio clip play as part of the spoken response from Cortana when you invoke the skill.

Now, let’s try another SSML element to make Cortana pause after she says “Hello World!”. Edit the markup assigned to reply.Speak so that it includes a <break> element:

    <speak version=""1.0"" xml:lang=""en-US"">Hello World! <break time=""500ms"" /> Testing a sample mp3 file. It works! <audio src=""https://www.sample-videos.com/audio/mp3/crowd-cheering.mp3""/> </speak>

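Here, we are asking Cortana to pause for 500 milliseconds before continuing with the rest of the response. The doubled quotes are needed because the markup sits inside a C# verbatim string (@"...").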
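
As the markup grows, hand-escaping quotes inside a string gets error-prone. One alternative is to build it with System.Xml.Linq, which handles quoting and escapes characters such as & for you. This is a minimal sketch; `SsmlBuilder` and `BuildSpeak` are hypothetical names, not part of the Cortana Skills Kit.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper for illustration; not part of the Cortana Skills Kit.
public static class SsmlBuilder
{
    // Builds <speak> markup: intro text, a pause, follow-up text, then an audio clip.
    public static string BuildSpeak(string intro, int pauseMs, string followUp, string audioUrl)
    {
        var speak = new XElement("speak",
            new XAttribute("version", "1.0"),
            new XAttribute(XNamespace.Xml + "lang", "en-US"),
            intro + " ",
            new XElement("break", new XAttribute("time", pauseMs + "ms")),
            " " + followUp + " ",
            new XElement("audio", new XAttribute("src", audioUrl)));

        // Keep the markup on one line, with no indentation added.
        return speak.ToString(SaveOptions.DisableFormatting);
    }
}
```

In run.csx this could be used as `reply.Speak = SsmlBuilder.BuildSpeak("Hello World!", 500, "Testing a sample mp3 file. It works!", "https://www.sample-videos.com/audio/mp3/crowd-cheering.mp3");`. Depending on your hosting environment, you may need to add a reference to System.Xml.Linq first.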

Save the file and test by invoking your skill on Cortana.

You can do much more with other elements such as <prosody>, <phoneme> and <say-as>. Refer to the documentation for usage details of these and other tags.
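
To give a flavor of what these look like, here is a small sketch of a response that slows down the greeting with <prosody> and spells out an acronym with <say-as>. The attribute values (`rate="slow"`, `interpret-as="characters"`) follow common W3C SSML conventions; whether Cortana honors these exact values is an assumption, so check them against the documentation. `SsmlSamples` is a hypothetical name used only for this example.

```csharp
using System;

// Illustrative markup only. The attribute values follow W3C SSML conventions and
// are assumptions as far as Cortana's support for them is concerned.
public static class SsmlSamples
{
    public const string ProsodyAndSayAs =
        @"<speak version=""1.0"" xml:lang=""en-US"">" +
        @"<prosody rate=""slow"">Hello World!</prosody> " +
        @"This response was written in <say-as interpret-as=""characters"">SSML</say-as>." +
        @"</speak>";
}
```

You could try it by assigning `SsmlSamples.ProsodyAndSayAs` to `reply.Speak` and invoking the skill.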

Please let us know below if you have any questions. We would also love to hear about specific topics for blogs that you would like to see and we would be happy to oblige!

Have a great day!